Hyper-V NIC Teaming: everything you need to know

In this article, you will find out:

- how to use Hyper-V NIC Teaming

- which versions of Windows support NIC Teaming for Hyper-V virtual machines

- everything about Hyper-V NIC Teaming

- VMFS Recovery™ requirements for VMware backup (VM)

Are you ready? Let's read!

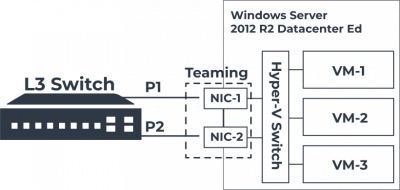

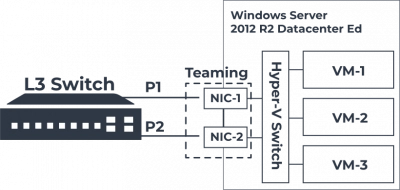

Setting up network connections is one of the most important aspects of Hyper-V configuration. This covers network adapters, cabling, virtual switches, traffic flows, and uplinks, among other things. If the Hyper-V network is configured incorrectly, problems with performance, availability, or stability can appear right away.

Because Hyper-V comes with extensive network capabilities out of the box, administrators can tailor it to a variety of use cases and configurations. The ability to form NIC teams is a feature of both Windows Server and Hyper-V. NIC teaming has long been part of Windows Server deployments, providing more network capacity and fault tolerance for the server's network connections. Microsoft offers this functionality natively in current versions of Windows Server.

We'll talk about the following topics in this post:

- What are the advantages of NIC teaming for Hyper-V administrators?

- How is it set up?

- What are its use cases?

What is Windows Server Native NIC Teaming?

In earlier versions of Windows, many Windows Server administrators depended on third-party network card vendors to supply modified Windows drivers that enabled teaming. This was often a challenge: every new driver release had to be tested thoroughly, and a bad release could cause network problems in the team. And because the teaming came from third-party drivers, you had to contact your network card manufacturer for help if you had any problems.

Starting with Windows Server 2012, Microsoft introduced the ability to create network teams natively in the Windows Server operating system. This eliminates many of the issues that administrators experienced with the third-party drivers and programs used to build production network card teams. Microsoft supports teams built with Windows Server native teaming, and in general native teaming has proven to be a considerably more stable way to form production network teams.

Tip: learn how to clone a VM in Hyper-V!

Let's talk about Windows Server NIC Teaming in detail

The native NIC Teaming feature in Windows Server lets you join up to 32 physical Ethernet network adapters into one or more software-based virtual network adapters, or teams. For production workloads, this provides fault tolerance and better performance. A few things to keep in mind with Windows Server NIC teams:

- May contain 1-32 physical adapters

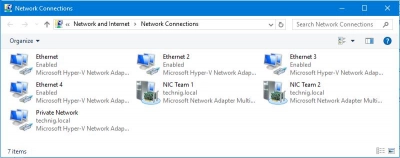

- Aggregated into team NICs (tNICs), which are presented to the OS

- Teamed NICs can be connected to the same switch or different switches as long as they are on the same subnet/VLAN

- Can be configured using the GUI or PowerShell

NICs that cannot be placed into a Windows Server NIC team:

- Hyper-V virtual network adapters that are Hyper-V Virtual Switch ports exposed as NICs in the host partition

- The KDNIC – kernel debug network adapter

- Non-Ethernet network adapters – WLAN/Wi-Fi, Bluetooth, InfiniBand, etc.
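
The membership rules above can be expressed as a small pre-flight check. The following is only a sketch: the `Adapter` class, its fields, and the `teaming_problems` function are hypothetical names invented for illustration and are not part of any Windows API. It simply encodes the limits listed in this article (1-32 adapters, Ethernet only, no host virtual adapters, no KDNIC).

```python
from dataclasses import dataclass

MAX_TEAM_MEMBERS = 32  # upper limit quoted in this article


@dataclass(frozen=True)
class Adapter:
    """Hypothetical description of one network adapter."""
    name: str
    media: str = "ethernet"     # e.g. "ethernet", "wifi", "bluetooth", "infiniband"
    is_host_vnic: bool = False  # Hyper-V virtual switch port exposed in the host partition
    is_kdnic: bool = False      # kernel debug network adapter


def teaming_problems(adapters):
    """Return the reasons why these adapters cannot form one NIC team."""
    problems = []
    if not 1 <= len(adapters) <= MAX_TEAM_MEMBERS:
        problems.append(f"a team needs 1-{MAX_TEAM_MEMBERS} adapters, got {len(adapters)}")
    for adapter in adapters:
        if adapter.is_host_vnic:
            problems.append(f"{adapter.name}: Hyper-V host virtual adapter")
        if adapter.is_kdnic:
            problems.append(f"{adapter.name}: kernel debug adapter (KDNIC)")
        if adapter.media != "ethernet":
            problems.append(f"{adapter.name}: non-Ethernet adapter ({adapter.media})")
    return problems


# Example: two Ethernet NICs are fine, the Wi-Fi adapter is rejected.
candidates = [Adapter("NIC1"), Adapter("NIC2"), Adapter("Wi-Fi", media="wifi")]
for problem in teaming_problems(candidates):
    print(problem)
```

An empty result means none of the restrictions listed here apply; it does not guarantee that the team will work on real hardware.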

Advanced Network settings that are not compatible with NIC Teaming in Windows Server 2016:

- SR-IOV – Single-root I/O virtualization allows data to interact with the network adapter directly, without involving the Windows Server host operating system, thus bypassing this middle layer of communication

- QoS capabilities – QoS policies shape and prioritize the traffic traversing the NIC team; policies that set bandwidth limits can lower the overall throughput of the team

- TCP Chimney – TCP Chimney offloads the entire networking stack directly to the NIC, so it is not compatible with NIC Teaming

- 802.1X authentication – 802.1X is generally not supported with NIC Teaming, so the two should not be used together


Here is how to configure a Windows Server 2016 NIC Team

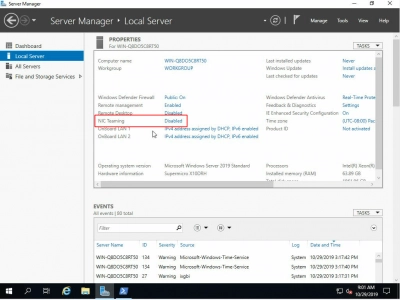

Using Server Manager or PowerShell, you can quickly configure a NIC team in Windows Server 2016. In Server Manager, you'll find the NIC Teaming entry on the Local Server screen; it is set to Disabled by default. Click the Disabled hyperlink.

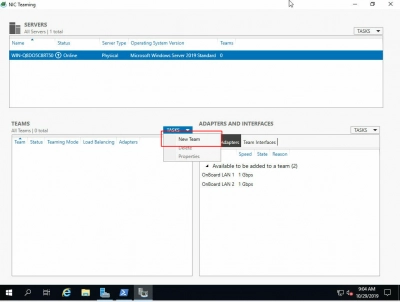

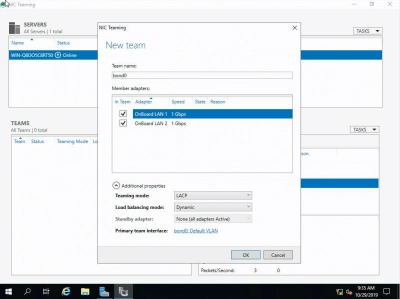

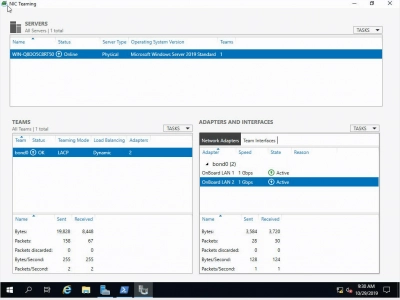

This opens the NIC Teaming configuration dialog box, where you can create a new NIC team. Select the NICs you wish to team, open the Tasks dropdown menu, and pick the Add to New Team option.

The NIC Teaming dialog box appears. Here you can give the NIC team a name, check or uncheck adapters to make them members of the team, and set additional properties. Expand Additional Properties.

Under Additional Properties, select the Teaming mode, Load Balancing mode, and Standby adapter/Primary team interface.

The teaming mode controls how the network cards are combined into a team. The options are as follows:

- Static Teaming - Both the switch and the server must be configured manually to identify which links make up the team. Because the setup is static and involves the switch, this is a switch-dependent mode of NIC teaming.

- Switch Independent - The team needs no configuration on the switch, which allows you to distribute the NICs across several network switches in your environment.

- LACP - A smarter, switch-dependent kind of link aggregation that uses the Link Aggregation Control Protocol to set up the aggregation dynamically and automatically. It also supports intelligent link failover. It accomplishes this by transmitting LACP PDUs over all of the LACP-enabled links.
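
To make the trade-off between the three teaming modes concrete, here is a rough decision helper. It is a sketch under stated assumptions: the `TEAMING_MODES` table and `candidate_modes` function are hypothetical, and the table assumes that the switch-dependent modes (Static Teaming and LACP) need all team members on one switch (or one logical multi-chassis switch), while Switch Independent does not.

```python
# mode -> (switch must be configured, members may sit on separate switches)
# Assumption: switch-dependent modes require a single (logical) switch.
TEAMING_MODES = {
    "Switch Independent": (False, True),
    "Static Teaming": (True, False),
    "LACP": (True, False),
}


def candidate_modes(can_configure_switch, spans_switches):
    """List the teaming modes that fit the given network environment."""
    result = []
    for mode, (needs_switch_config, may_span) in TEAMING_MODES.items():
        if needs_switch_config and not can_configure_switch:
            continue  # the switch side cannot be set up
        if spans_switches and not may_span:
            continue  # members are spread over independent switches
        result.append(mode)
    return result


# NICs spread over two unmanaged switches: only one mode remains.
print(candidate_modes(can_configure_switch=False, spans_switches=True))
```

The helper only narrows the choice; which of the remaining modes is best still depends on your switches and workload.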

The Load Balancing mode also offers a few interesting choices:

- Address Hash - Builds a hash from the source and destination TCP ports and the source and destination IP addresses, and uses it to assign traffic to team members. Including the TCP ports in the hash creates the most granular distribution of traffic streams.

- Hyper-V Port - Traffic is balanced per VM: each VM communicates through a single NIC in the team. If that NIC fails, the VM's traffic moves to a remaining team member, so connectivity may be interrupted briefly but the VM can still access the network.

- Dynamic - Combines elements of the two previous modes. Outbound traffic is distributed based on a hash of TCP ports and IP addresses. Inbound traffic is distributed the same way as in Hyper-V Port mode.
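
The difference between hashing per flow and pinning per VM is easier to see in a toy model. The sketch below is illustrative only: the function names are hypothetical, and CRC32 and the modulo operation stand in for whatever hashing and port assignment Windows actually uses, which this article does not describe.

```python
import zlib


def address_hash_member(src_ip, src_port, dst_ip, dst_port, team):
    """Pick a team member from a hash of the flow's IP addresses and TCP ports."""
    key = f"{src_ip}:{src_port}>{dst_ip}:{dst_port}".encode()
    return team[zlib.crc32(key) % len(team)]  # CRC32 is a stand-in hash


def hyperv_port_member(vm_port_id, team):
    """Pin all traffic of one VM switch port to a single team member."""
    return team[vm_port_id % len(team)]


def fail_over(team, failed):
    """Return the surviving team members after one NIC fails."""
    return [nic for nic in team if nic != failed]


team = ["NIC1", "NIC2"]
flow = ("10.0.0.5", 50123, "10.0.0.9", 443)

# Address hash: a given flow always lands on the same NIC,
# but different flows of the same host can use different NICs.
print(address_hash_member(*flow, team))

# Hyper-V Port: VM port 7 uses NIC2 (7 % 2 == 1) for all of its traffic.
print(hyperv_port_member(7, team))

# After NIC2 fails, the same VM port is served by the surviving NIC1.
print(hyperv_port_member(7, fail_over(team, "NIC2")))
```

In this model, Dynamic mode would use something like `address_hash_member` for outbound traffic and something like `hyperv_port_member` for inbound traffic.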

Here are all Hyper-V Specific NIC Teaming Considerations

When implementing NIC teaming in Hyper-V, there are a few things to keep in mind. Virtual Machine Queue (VMQ) is one of the first to think about. VMQ is a performance-enhancing technique in which the NIC uses Direct Memory Access (DMA) to transfer network data directly into the virtual machine's memory.

The converged network model was introduced with Windows Server 2012 and Hyper-V. Combined with NIC teaming, it allows various network services to share a single team of NICs. The lone exception to this traffic consolidation is the storage network, which is still recommended to be kept separate from the other types of traffic in a Hyper-V cluster.

- Windows Server NIC Teaming is designed to work with VMQ, and the two can be enabled together

- Windows Server NIC Teaming is compatible with Hyper-V Network Virtualization or HNV

- Hyper-V Network Virtualization interacts with the NIC Teaming driver and allows NIC Teaming to distribute the load optimally with HNV

- NIC Teaming is compatible with Live Migration

- NIC Teaming can be used inside of a virtual machine as well. Virtual network adapters must be connected to the external Hyper-V virtual switches only. Any virtual network adapters that are connected to internal or private Hyper-V virtual switches won’t be able to communicate to the switch when teamed and network connectivity will fail

- Windows Server 2016 NIC Teaming supports teams with two members in VMs. Larger teams are not supported with VMs

- Hyper-V Port load-balancing provides a greater return on performance when the ratio of virtual network adapters to physical adapters increases

- A virtual network adapter will not exceed the speed of a single physical adapter when using Hyper-V port load-balancing

- If the Hyper-V Port algorithm is used with the Switch Independent teaming mode, the virtual switch can register the MAC addresses of the virtual adapters on separate physical adapters, which statically balances incoming traffic
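
The point about the ratio of virtual to physical adapters can be illustrated with a little arithmetic. This is a simplified sketch, assuming that VM switch ports are assigned to team members round-robin and that every VM generates the same amount of traffic; both assumptions, and the function names, are made up for this example.

```python
from collections import Counter


def vms_per_nic(vm_count, team):
    """Assign VM switch ports to team members round-robin and count them."""
    return Counter(team[i % len(team)] for i in range(vm_count))


def imbalance(vm_count, team):
    """Gap between the busiest and the idlest NIC, as a share of all VMs."""
    counts = vms_per_nic(vm_count, team)
    loads = [counts.get(nic, 0) for nic in team]
    return (max(loads) - min(loads)) / vm_count


team = ["NIC1", "NIC2"]
for vm_count in (1, 3, 21):
    # The more VMs share the team, the more evenly the NICs are used.
    print(vm_count, dict(vms_per_nic(vm_count, team)), round(imbalance(vm_count, team), 2))
```

With a single VM, one NIC carries everything and the other sits idle, which is also why one virtual adapter cannot exceed the speed of one physical adapter in this mode. As the number of VMs grows, the gap between team members shrinks.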

VMFS Recovery: protect your data

To protect your data, you can export the virtual machine to another device. This gives you a complete copy of the existing virtual machine, including virtual hard disk files, virtual machine configuration, and Hyper-V snapshots. This process is not fast and can take several days. In addition, it does not use encryption, compression, or deduplication, so it does not make efficient use of the available disk space.

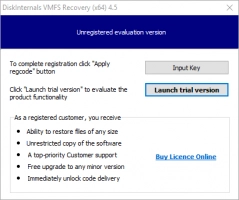

Tip: Discover the difference between Hyper-V and VMware recovery!

You can also opt for the DiskInternals VMFS Recovery application, which recovers missing or inaccessible VMDK files in their native state in minutes. Data recovery runs either manually or automatically using the Recovery Wizard. The recovered information is exported to local or remote locations (including FTP), and any virtual disk can be converted to a local one for easier and more convenient access (for example, in Windows Explorer). Unicode support is also available.


Please read these instructions for using the VHD recovery tool before you start recovering files:

Please download this application from the DiskInternals website and install it on your device.

After launching the application, if necessary, connect via SSH, open the disk and start scanning.

After that, VMFS Recovery will find all the VMDK files and mount them into one VMDK file.

Then check the recovered files for their integrity - it's a must, and it's free.

If everything suits you, it's time to buy a license and export all found or certain files at your discretion to another storage device.

Conclusion

By using NIC teaming, Windows Server and Hyper-V clusters can take advantage of natively built-in network aggregation features. With NIC teaming, multiple physical adapters are joined to form a single software-defined network adapter. Using Hyper-V's new converged networking approach, network traffic from the several networks a Hyper-V cluster needs can be consolidated onto a single NIC team. Administrators have a number of choices for controlling how the team itself is created, as well as the load-balancing method that distributes the traffic.

Each of these arrangements has advantages and disadvantages, especially when it comes to Hyper-V virtual machines. Keep in mind that NIC teaming is incompatible with several advanced network technologies such as SR-IOV and 802.1X. Make use of the built-in Windows NIC teaming functionality, which was introduced with Windows Server 2012 and has proven to be far less troublesome than third-party drivers while also being fully supported by Microsoft.